# End-to-end Testing

# What

# What is a test suite?

A test suite is a collection of full conversations (end-to-end tests) and unit tests. Think of it as your workspace for running different types of tests.

# What is a test dialog?

A test dialog is a set of test cases that are intended to test the flow of a conversation.

# What is a test case?

Each individual utterance (query) that is used to test the robustness of the AI model is a test case. A test case can test the performance of classification, slot-value pairing (SVP), slot mapping, and response, either separately or all at once.

# What is a test assertion?

On the Clinc AI Platform, a test assertion checks whether the output of one part of the AI pipeline (intent, slot, slot mapping, or response) matches the expected value. A quick way to assert expected intents, slots, slot mappings, and response values is Autofill. You can also assert manually when the value doesn't match your expectation.
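To make the idea concrete, here is a minimal Python sketch of how an `EQUAL` assertion could be evaluated. It is an illustration, not the platform's implementation: the function name `assertion_passes` and the shape of the `actual` dictionary are assumptions. The assertion fields (`assertion_type`, `type`, `slot`, `expected`) follow the JSON export format shown later on this page, where `expected` is a JSON-encoded string.

```python
import json


def assertion_passes(assertion: dict, actual: dict) -> bool:
    """Return True when the decoded expected value equals the actual value.

    Hypothetical helper: only the EQUAL assertion type is handled here.
    """
    if assertion["assertion_type"] != "EQUAL":
        raise ValueError(f"unsupported assertion type: {assertion['assertion_type']}")
    # "expected" is stored as a JSON string, so it must be decoded first.
    expected = json.loads(assertion["expected"])
    return expected == actual


clf_assertion = {
    "assertion_type": "EQUAL",
    "type": "CLF",
    "slot": "",
    "expected": "{\"intent\": [\"ingredients_list_start\"]}",
}

# The "actual" dictionaries below stand in for pipeline output (assumed shape).
print(assertion_passes(clf_assertion, {"intent": ["ingredients_list_start"]}))  # True
print(assertion_passes(clf_assertion, {"intent": ["food_order_start"]}))  # False
```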

# What is end-to-end testing in the Clinc AI Platform?

On the Clinc AI Platform, end-to-end testing is the practice of testing the flow of conversations, both within a competency and between competencies. It checks not only whether each query is classified into the right intent, slots are extracted and mapped correctly, and the expected response is returned, but also whether context is retained.

# How

How to create test dialogs and add test cases?

How to interpret full conversations test results?

# How to create a test suite?

Just as you create a new AI version when you start a new project, you would generally create a new test suite to test the robustness of an AI version.

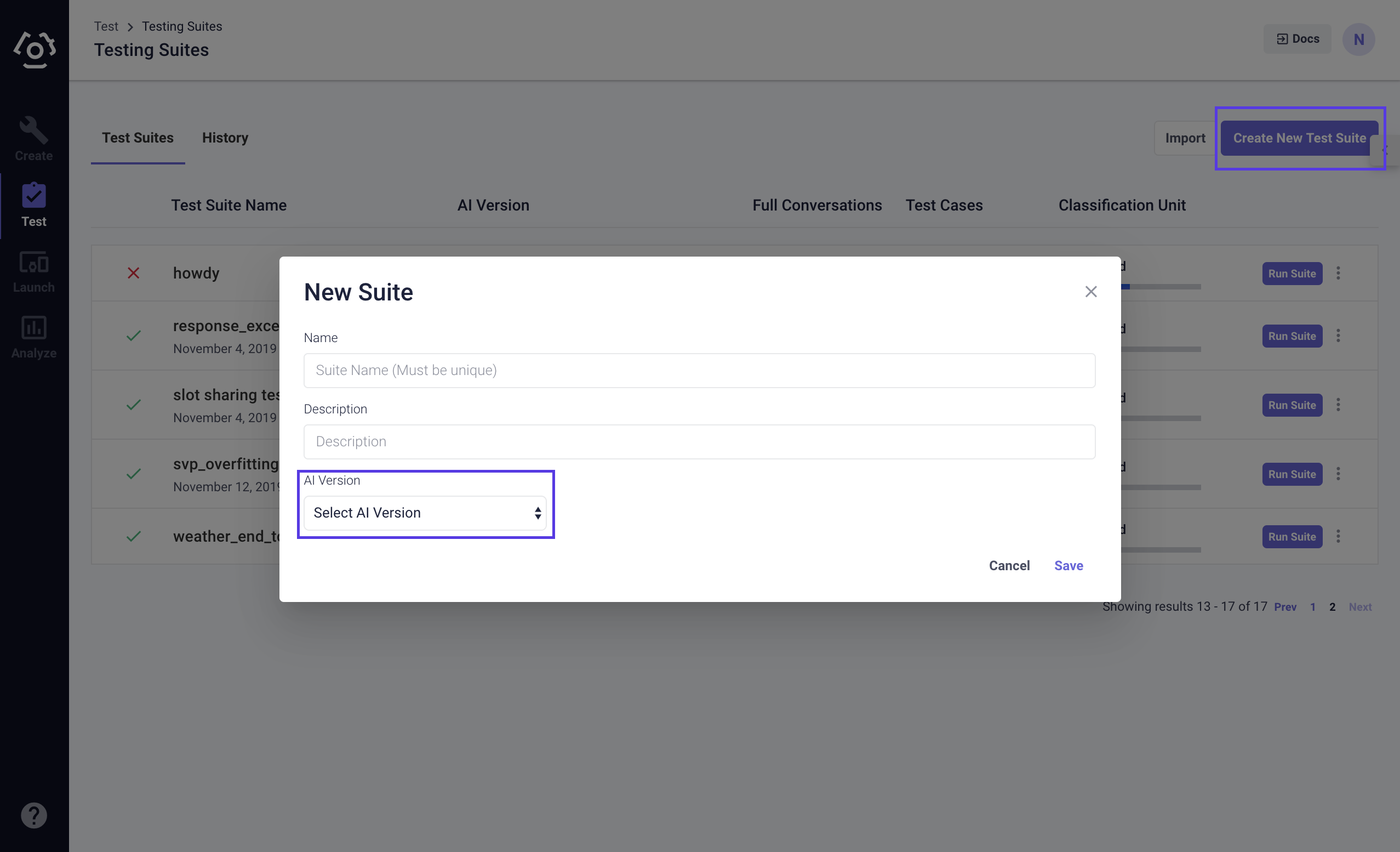

# Create a Test Suite

Creating a new test suite is similar to creating an AI version:

- Go to the Test tab on the navigation bar.

- Click Create New Test Suite on the upper right corner.

- Give the new test suite a name and description.

- Assign the test suite to the AI version you want to test, then click Save.

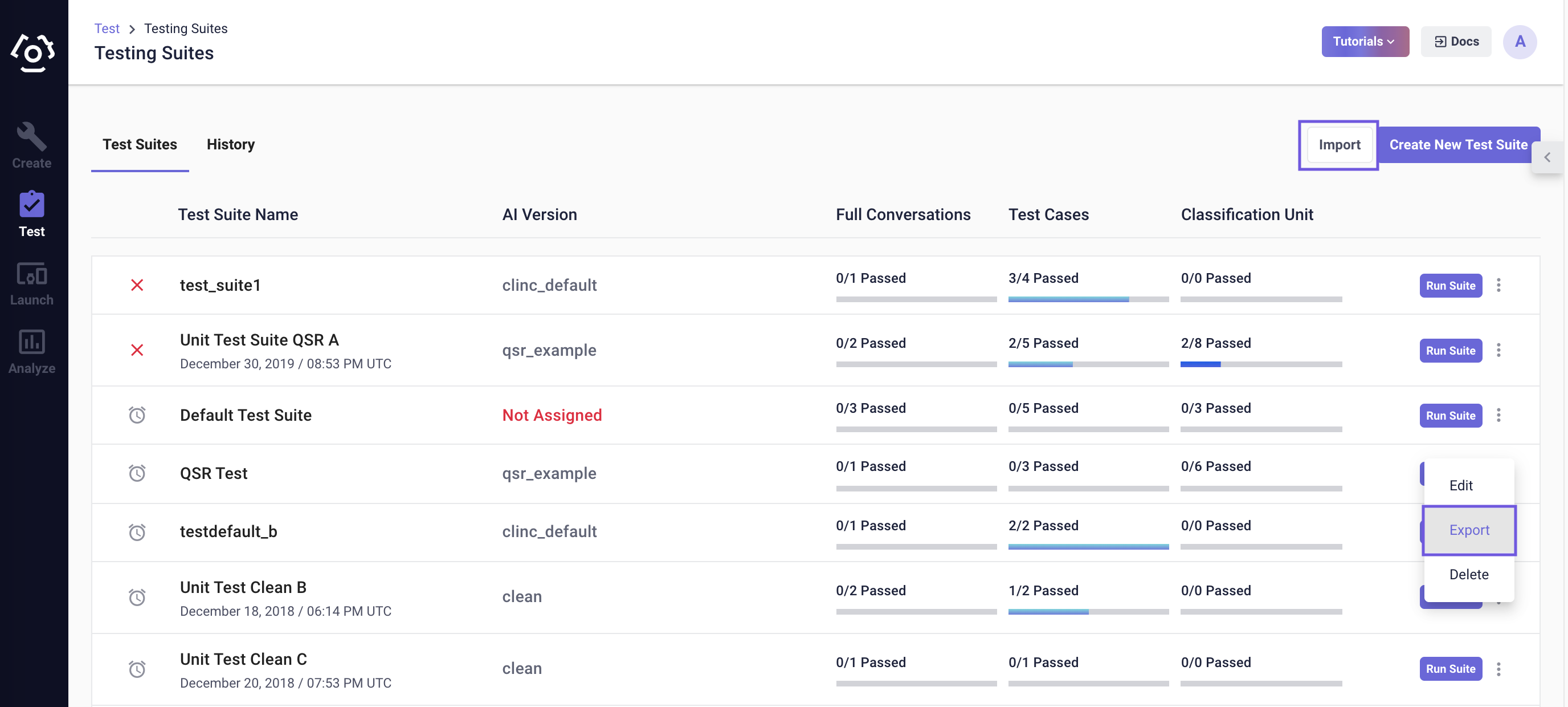

# Import/Export Test Suites

As with training data, you can import and export test data. On the test suites page, the Import button is at the top of the page, while Export is in the dropdown menu of each individual test suite.

Test suites can be exported to either a JSON or a CSV file. The same two file types can be imported; however, they need to be in one of the following formats:

JSON

```json
{
  "name": "QSR Test",
  "description": "Testing qsr functionality",
  "test_dialogs": [
    {
      "name": "root_to_ingredient_list",
      "description": "root_to_ingredient_list",
      "testcases": [
        {
          "query": "What's in a hamburger?",
          "assertions": [
            {
              "assertion_type": "EQUAL",
              "type": "CLF",
              "slot": "",
              "expected": "{\"intent\": [\"ingredients_list_start\"]}"
            },
            {
              "assertion_type": "EQUAL",
              "type": "SVP",
              "slot": "_CALORIE_TOTAL_",
              "expected": "{\"slots\": [\"354\"]}"
            },
            {
              "assertion_type": "EQUAL",
              "type": "SVP",
              "slot": "_FOOD_MENU_",
              "expected": "{\"slots\": [\"hamburger\"]}"
            },
            {
              "assertion_type": "EQUAL",
              "type": "RESP",
              "slot": "",
              "expected": "{\"visuals\": {\"formattedResponse\": \"The hamburger consists of ground beef patty, brioche bun, onions, lettuce, tomato, pickles, ketchup, mustard. The total calories for your meal so far is: 354.\"}}"
            }
          ],
          "extra_params": {}
        }
      ]
    }
  ],
  "format_version": 5,
  "clf_test_utterances": {
    "food_order_start": [
      "Need something to drink.",
      "I want a burger!",
      "I'm hungry!"
    ]
  }
}
```

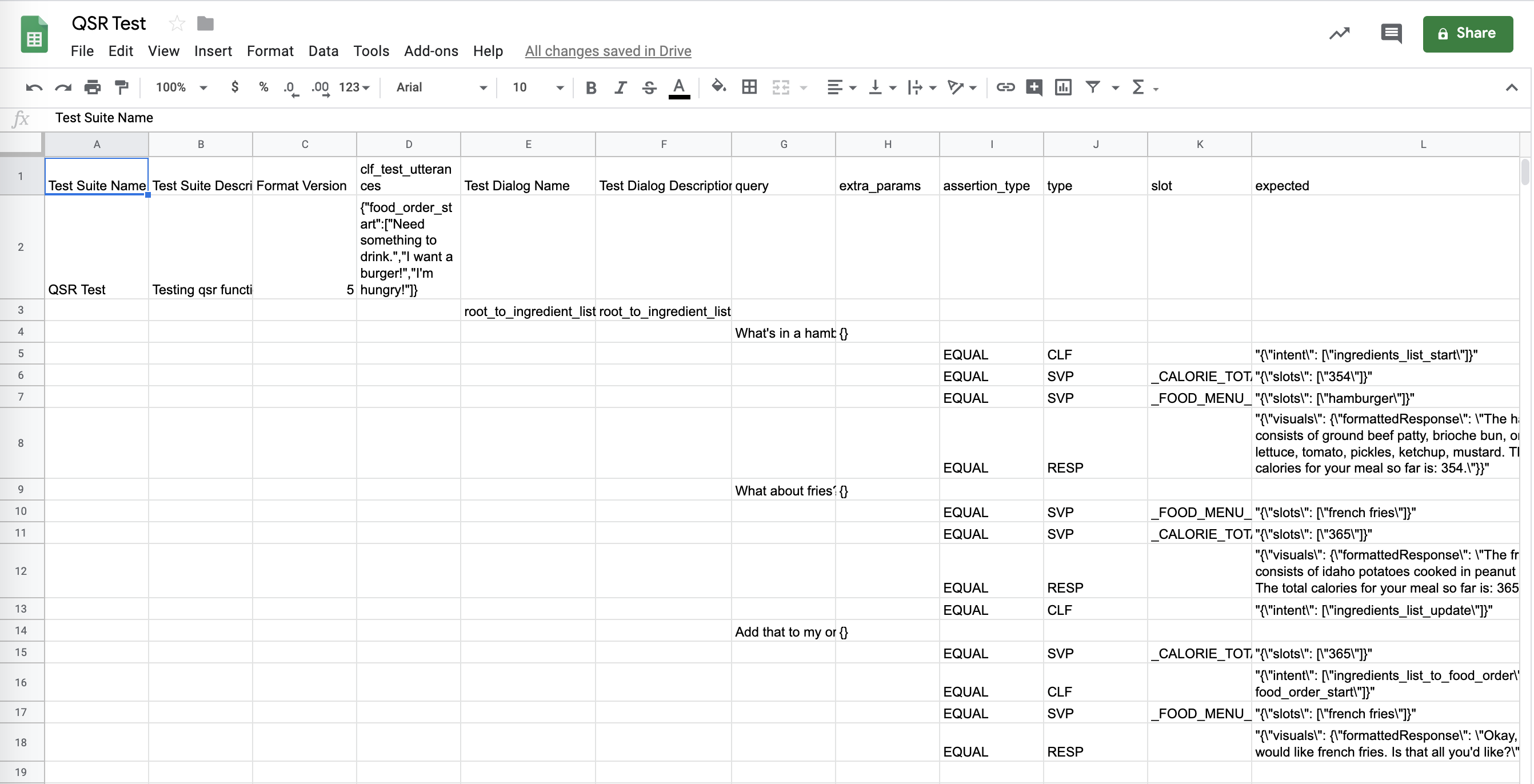

The JSON format consists mainly of a test_dialogs list and a clf_test_utterances dictionary of classification unit tests. The former includes each test query, assertion type, slot (if applicable), and expected value, exactly as you configured them in the platform. The extra_params dictionary can hold, for example, user data, which allows the business logic to aggregate and provide fields to the response template. The latter lists the classification unit test queries for each intent.
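As a rough illustration of how this structure can be read outside the platform, the following Python sketch walks a trimmed copy of the sample suite and lists every assertion. The helper name `list_assertions` is hypothetical. One detail worth noting is that each `expected` value is itself a JSON-encoded string, so it needs a second `json.loads` call.

```python
import json

# Trimmed copy of the sample export above (raw string keeps the \" escapes).
suite_json = r'''
{
  "name": "QSR Test",
  "test_dialogs": [
    {
      "name": "root_to_ingredient_list",
      "testcases": [
        {
          "query": "What's in a hamburger?",
          "assertions": [
            {"assertion_type": "EQUAL", "type": "CLF", "slot": "",
             "expected": "{\"intent\": [\"ingredients_list_start\"]}"},
            {"assertion_type": "EQUAL", "type": "SVP", "slot": "_FOOD_MENU_",
             "expected": "{\"slots\": [\"hamburger\"]}"}
          ],
          "extra_params": {}
        }
      ]
    }
  ],
  "clf_test_utterances": {"food_order_start": ["I want a burger!", "I'm hungry!"]}
}
'''


def list_assertions(suite: dict) -> list:
    """Flatten a suite into (dialog, query, type, slot, expected) tuples."""
    rows = []
    for dialog in suite.get("test_dialogs", []):
        for case in dialog.get("testcases", []):
            for assertion in case.get("assertions", []):
                # Decode the nested JSON string into a Python object.
                expected = json.loads(assertion["expected"])
                rows.append((dialog["name"], case["query"],
                             assertion["type"], assertion["slot"], expected))
    return rows


suite = json.loads(suite_json)
rows = list_assertions(suite)
for row in rows:
    print(row)

# Count the classification unit test utterances across all intents.
unit_test_count = sum(len(v) for v in suite.get("clf_test_utterances", {}).values())
print(unit_test_count)  # 2
```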

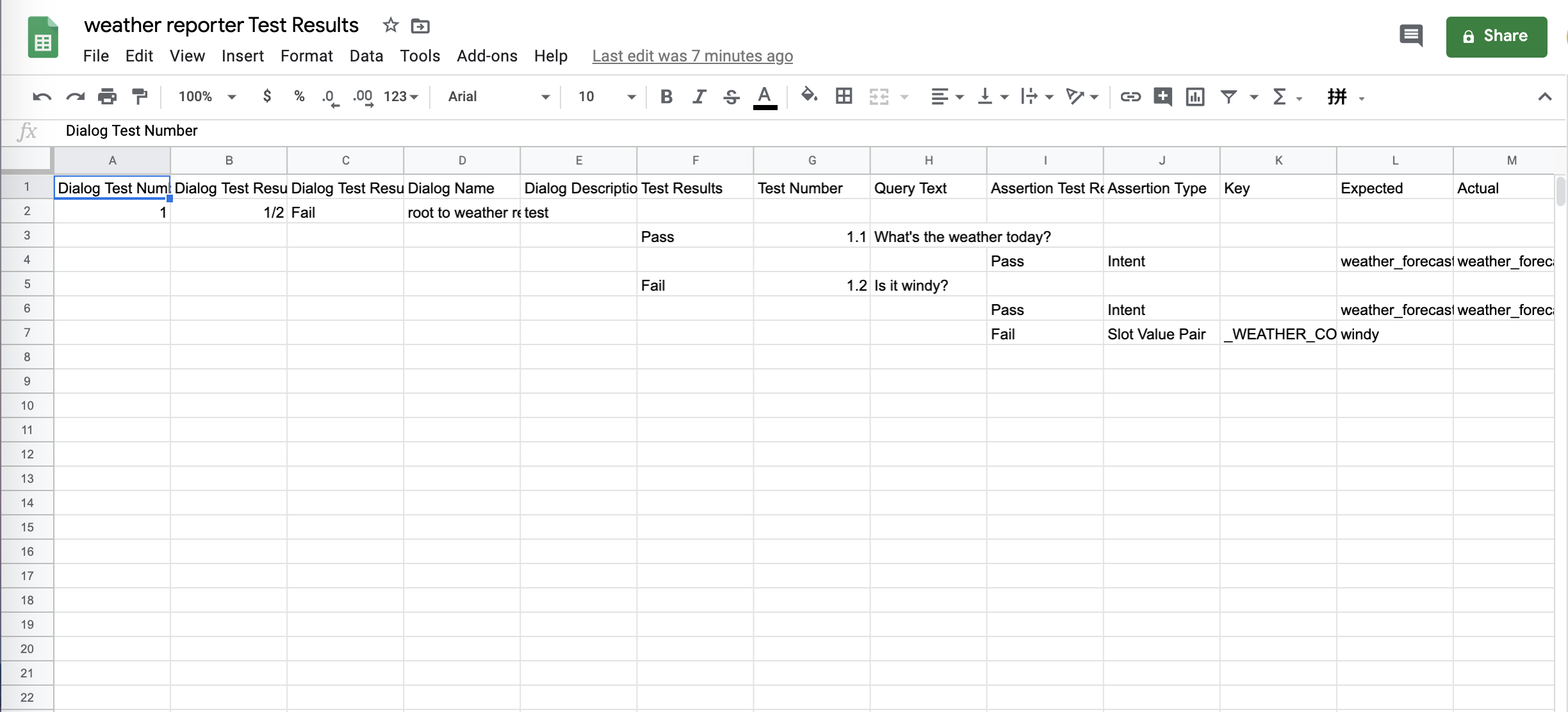

The CSV format provides the same information:

CSV

# How to create test dialogs and add test cases?

To access the Full Conversations page:

- Go to the Test tab on the navigation bar.

- Create a test suite or choose one that already exists.

- Make sure you are at the Full Conversations section.

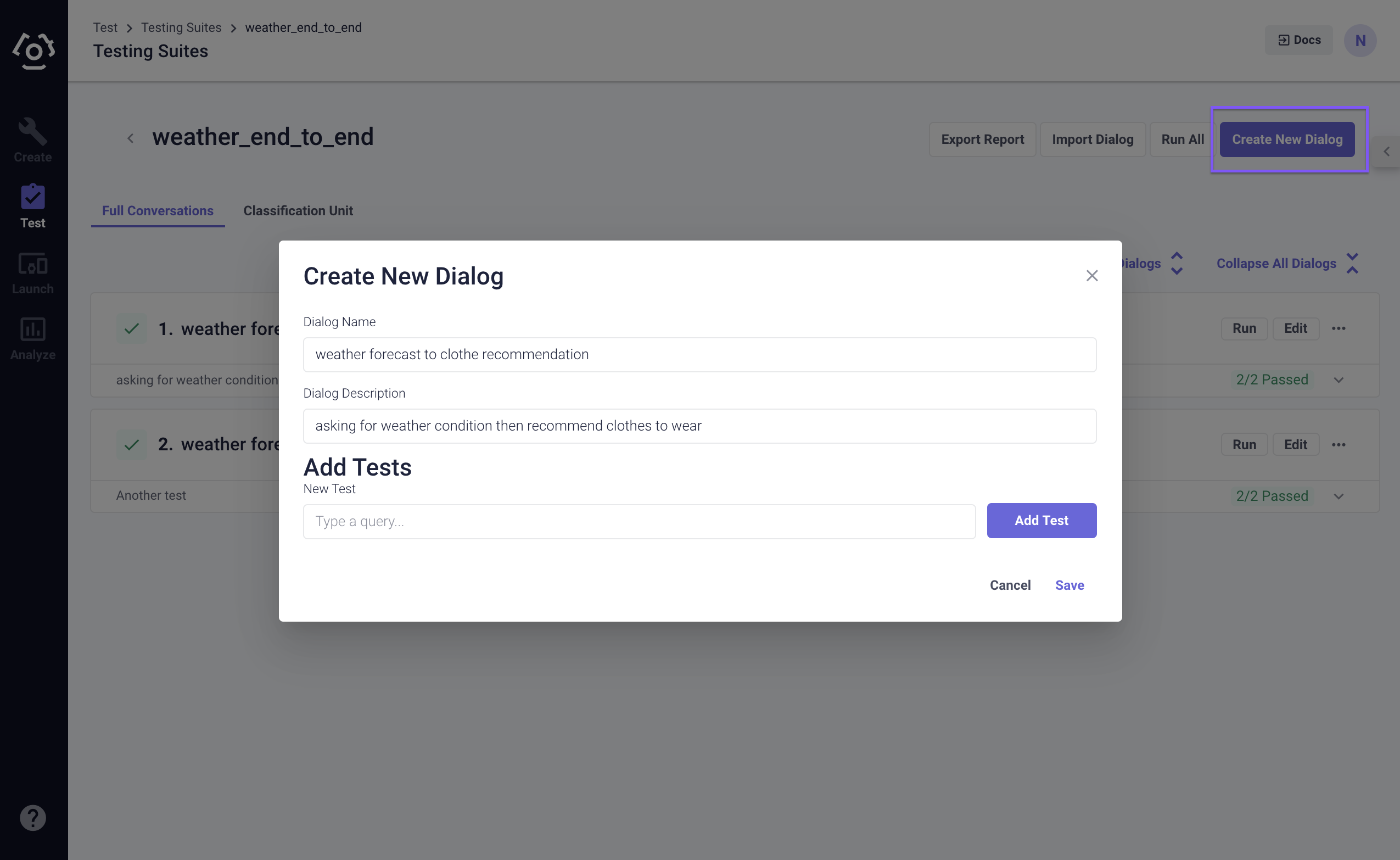

# Create a Test Dialog and Add Test Cases

A test dialog is one conversation flow that a user might have with your AI version. If you haven't created any dialogs yet:

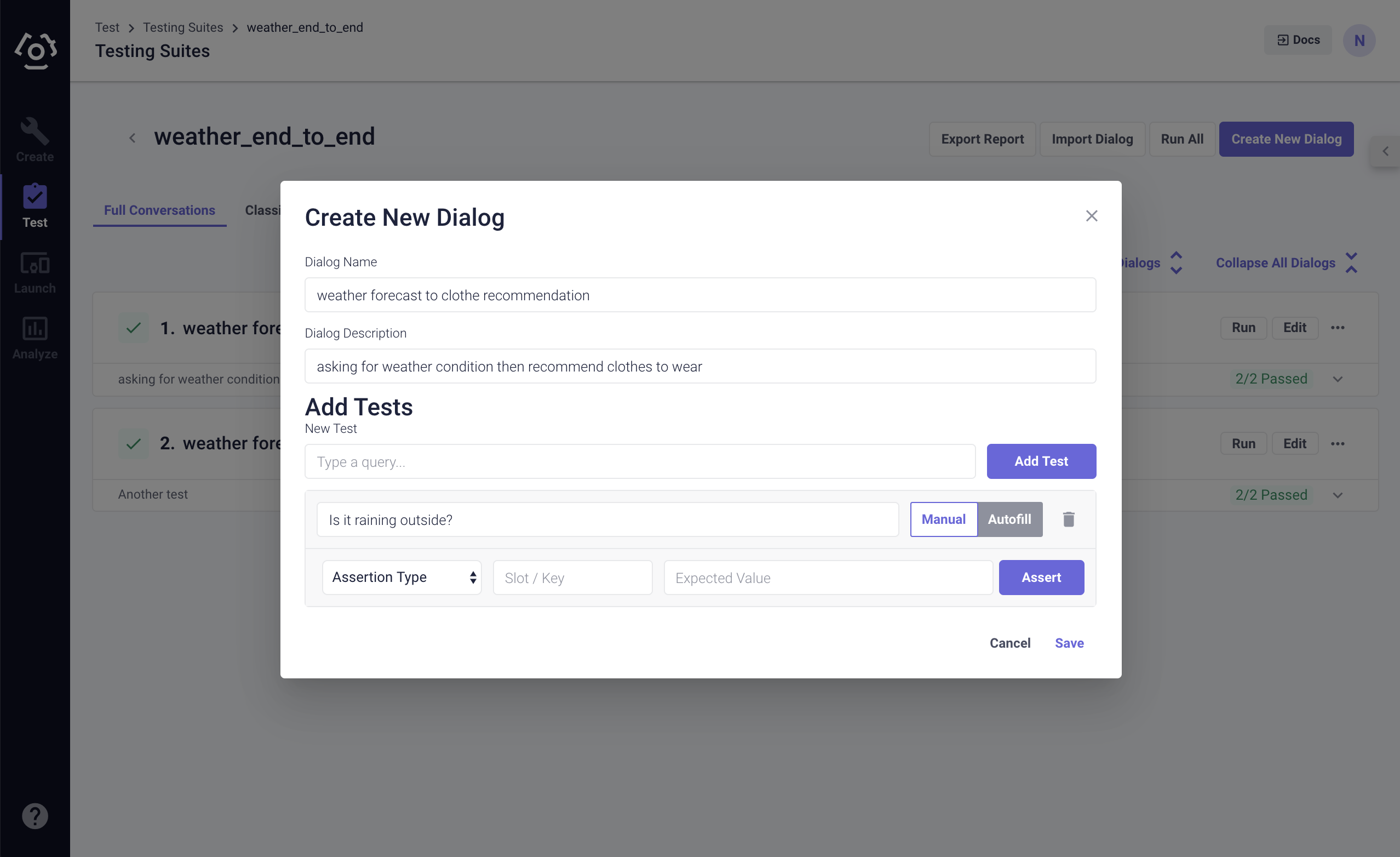

- Click Create New Dialog, then give the dialog a name and description. The description can be anything, for example a conversation that goes from one topic to another, as long as it reminds you what the dialog is about when you review it later.

- Add a test utterance in the "type a query" text field, then click Add Test.

Note: You should always separate testing utterances from training utterances.

- The assertion modal appears. There are two ways to add assertions: Manual and Autofill.
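The note above about keeping test utterances separate from training utterances can be checked mechanically before you add test cases. The sketch below is one possible approach, not a platform feature: `normalize` and `find_overlap` are hypothetical helpers, the training list is made up for illustration, and the matching ignores only case and extra whitespace.

```python
def normalize(utterance: str) -> str:
    """Lowercase and collapse whitespace so near-identical strings compare equal."""
    return " ".join(utterance.lower().split())


def find_overlap(training: list, testing: list) -> list:
    """Return the test utterances that also appear in the training data."""
    training_norm = {normalize(u) for u in training}
    return [u for u in testing if normalize(u) in training_norm]


# Illustrative data only; replace with your own exported utterances.
training = ["I want a burger!", "Can I get some fries?"]
testing = ["i want a  burger!", "What's in a hamburger?"]

overlap = find_overlap(training, testing)
print(overlap)  # ['i want a  burger!']
```

Any utterance reported here is a candidate for rewording or removal from the test dialog.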

# Manual Assertion

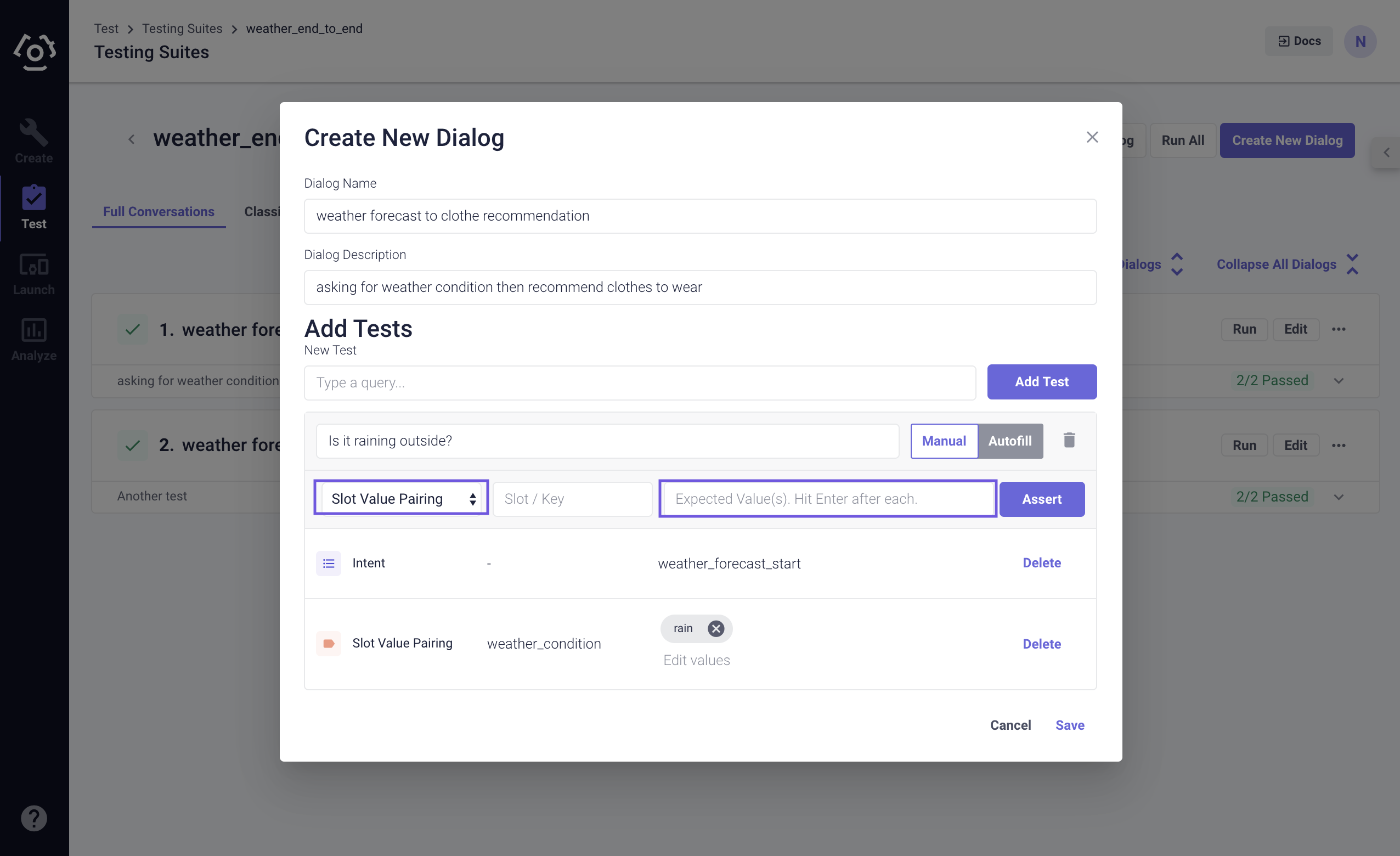

- When you toggle the switch to Manual, you can manually add assertions to your test utterance.

- Choose the Assertion Type: intent, slot, or response.

- Add the Expected Value (the slot/key field applies to Slot-Value Pairing assertions), then click Assert.

# Autofill

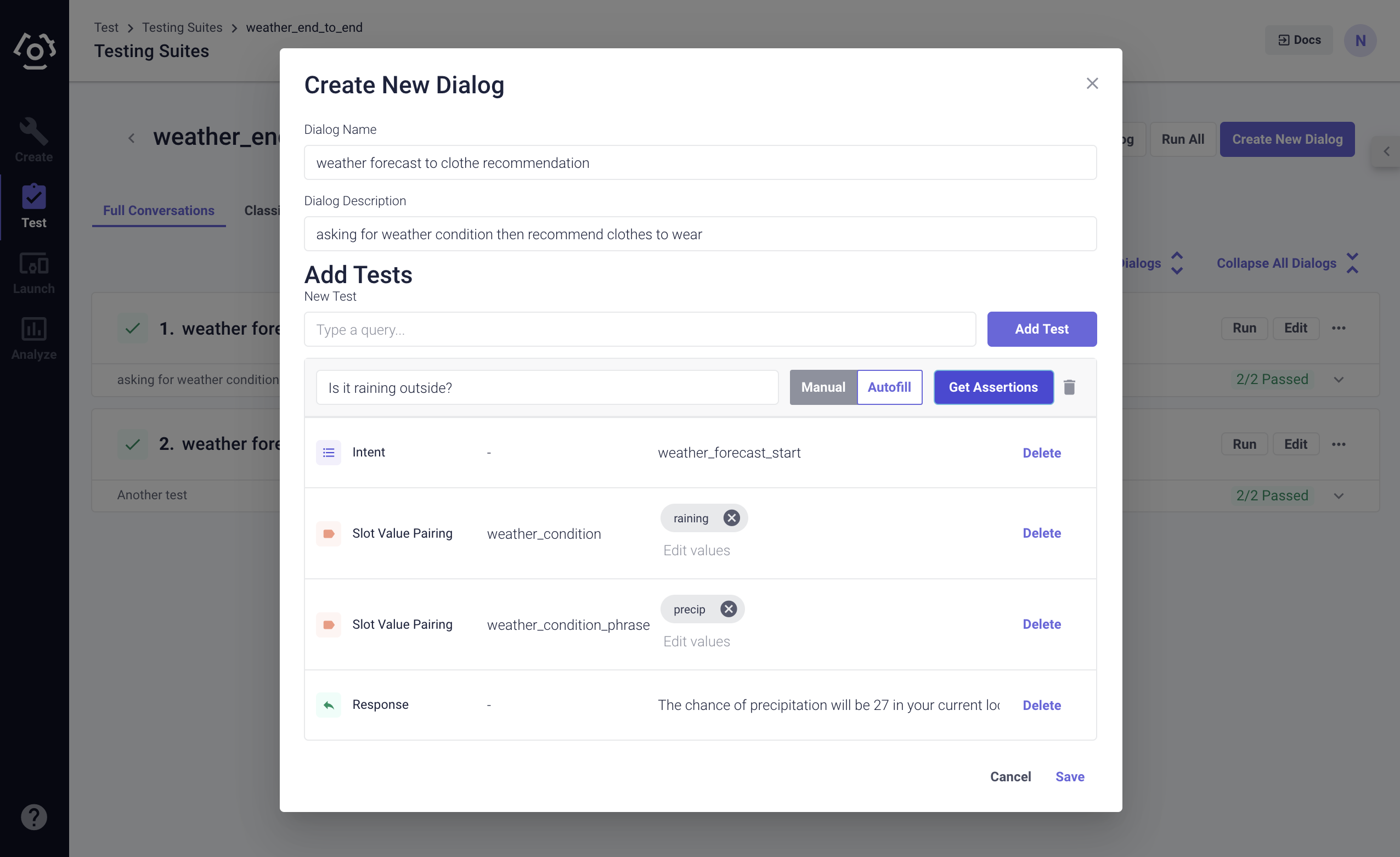

- Toggle the switch to Autofill, and click Get Assertions.

- The types of assertions it returns are the same as for manual assertion. Slot mapping is treated as a slot.

Note: We recommend including response assertions only once business logic and other components are configured properly. Otherwise, the test case status will always show as failed.

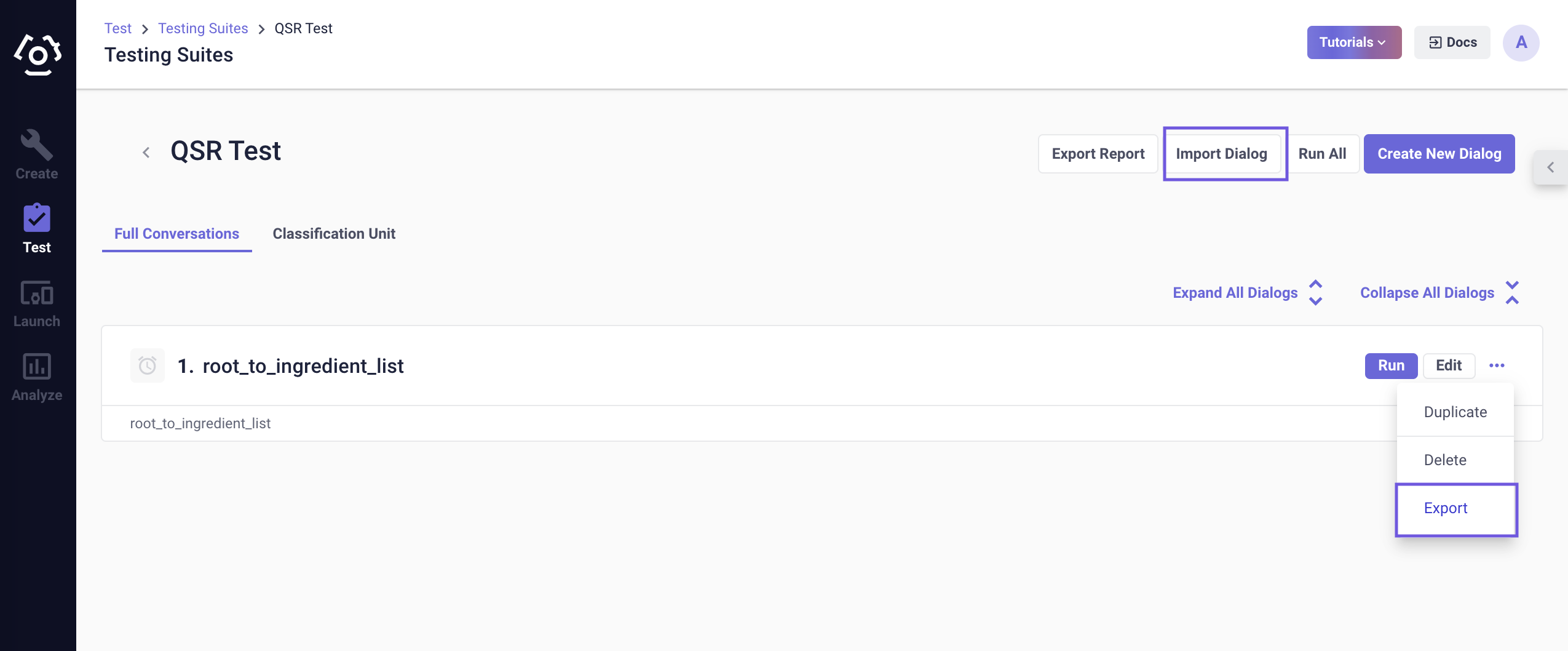

# Import/Export Test Dialogs

If you already have test dialogs that are applicable to this use case, you can reuse the test data by importing test dialogs on the Full Conversations page. The exact format is described in the previous section, Import/Export Test Suites.

Once you have added all the test cases, you can hit Save and Run the test dialog.

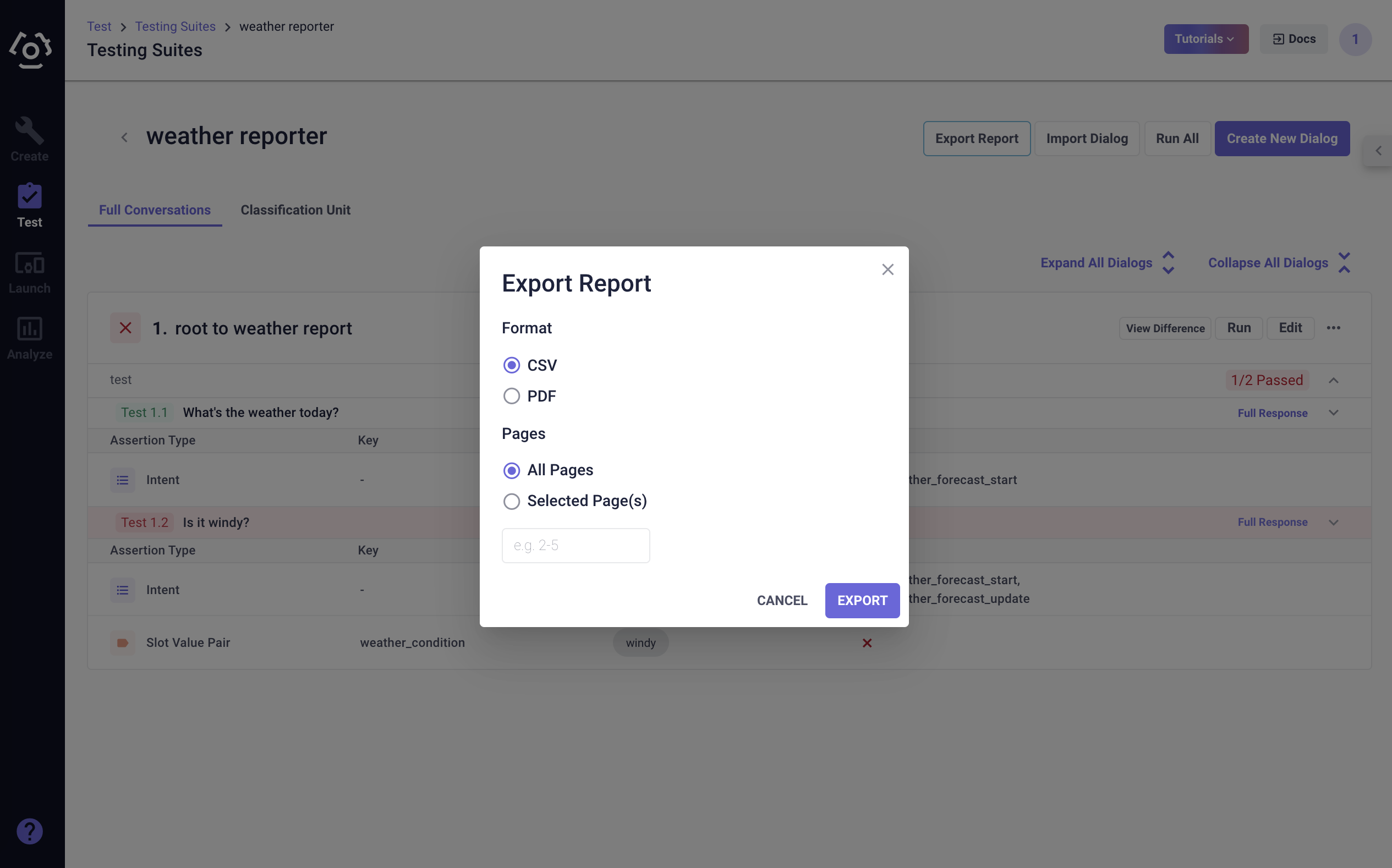

# Export Report

Export Report lets you export the results of test dialogs to either a PDF or a CSV file.

The PDF format creates a portable representation of the tests in the platform. The CSV file provides the same information, plus the ID and name of the AI version tested.

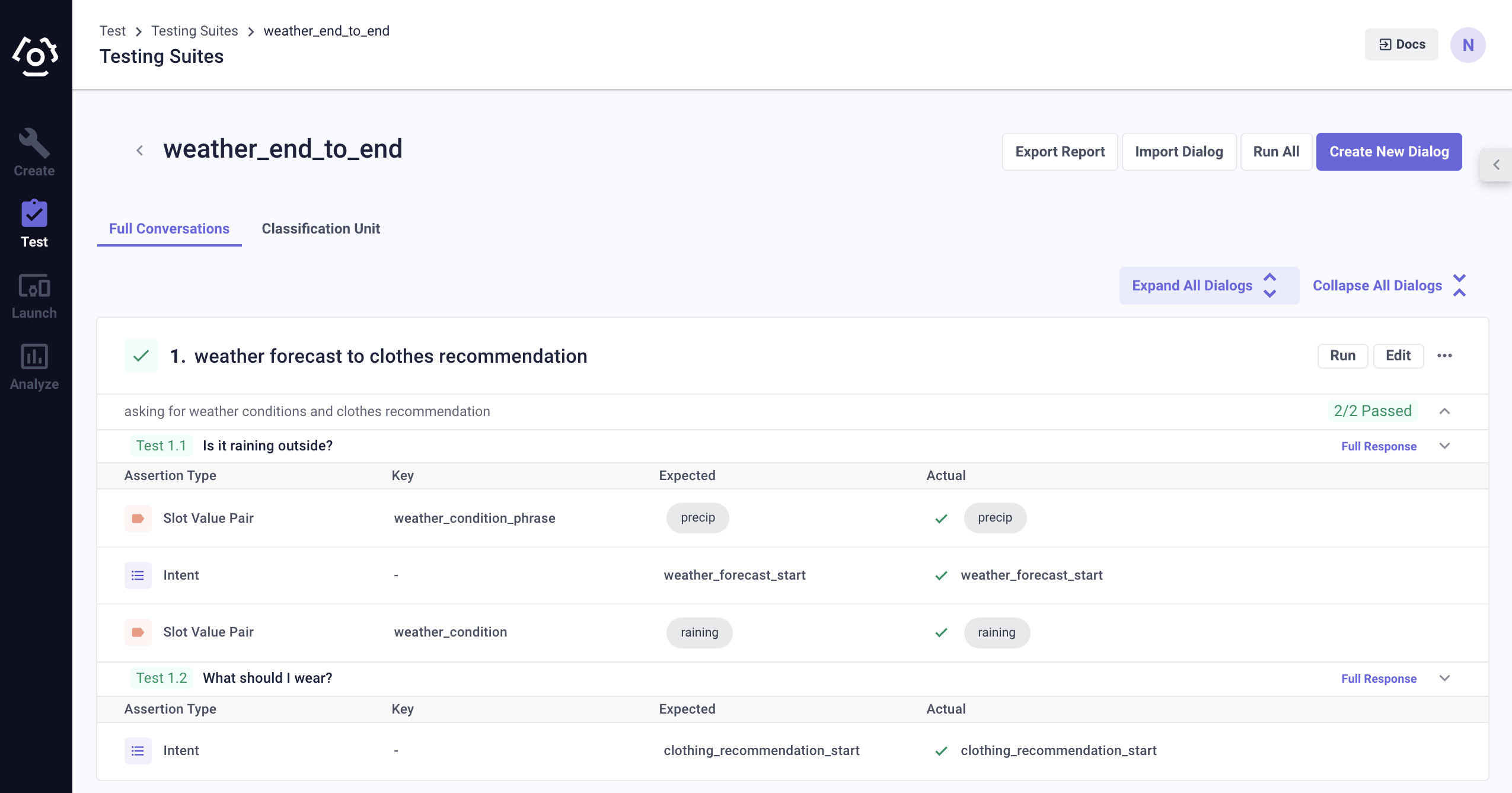

# How to interpret full conversations test results?

Interpreting end-to-end test results is fairly straightforward. Click Expand All to check the detailed result of each test case. It tells you at which part of the AI pipeline the test failed.

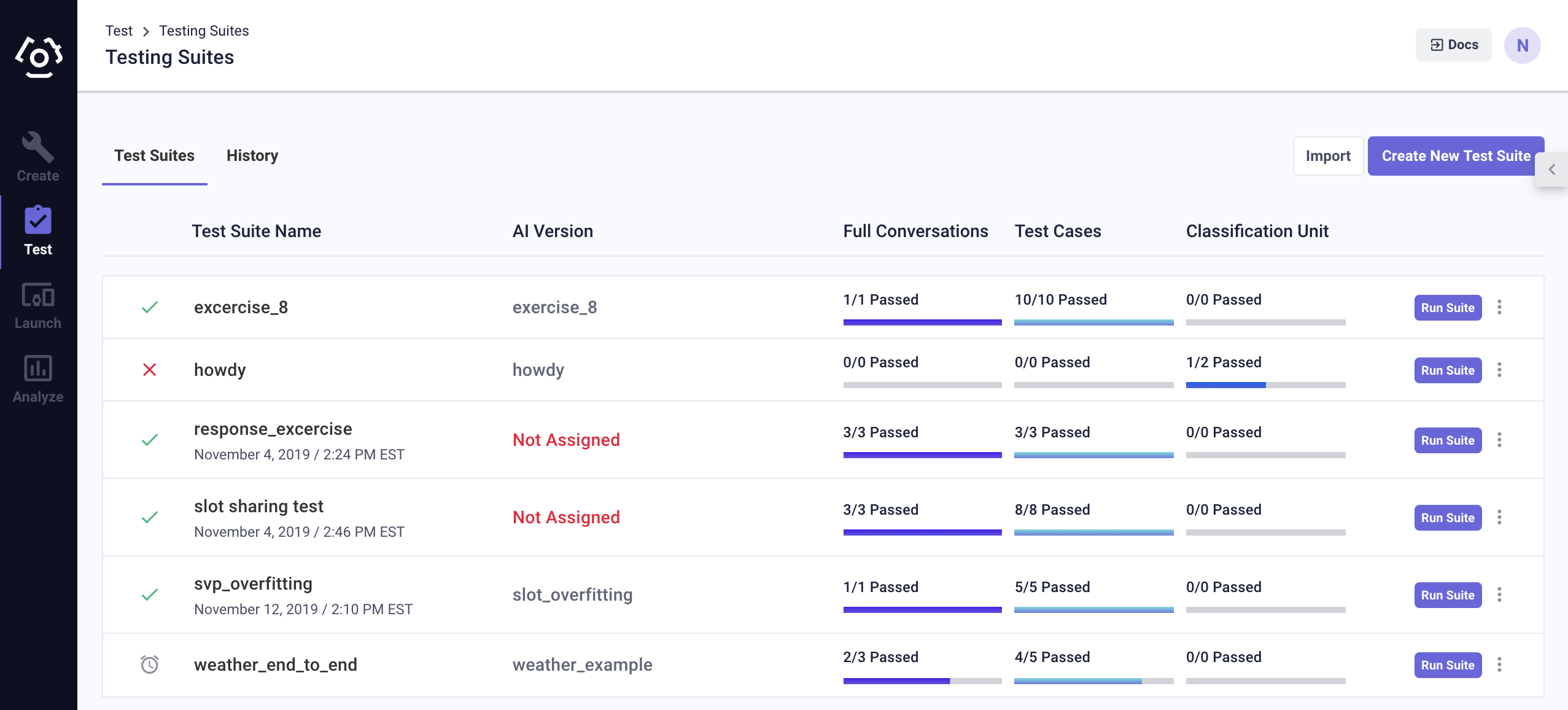

The test suites page also provides an overview of the results: the percentage of test dialogs passed, the percentage of test cases passed, and the classification unit tests passed inside the test suite.
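To show how these overview numbers relate to each other, here is a small Python sketch. The platform computes the figures for you; this is only an illustration. The `results` structure and the dialog name `order_then_cancel` are hypothetical, and the sketch assumes that a dialog counts as passed only when all of its test cases pass.

```python
def percent(passed: int, total: int) -> float:
    """Percentage rounded to one decimal place; 0.0 when there is nothing to count."""
    return round(100.0 * passed / total, 1) if total else 0.0


def summarize(dialog_results: dict) -> dict:
    """Compute pass percentages from per-dialog lists of test case outcomes."""
    cases = [ok for outcomes in dialog_results.values() for ok in outcomes]
    # Assumption: a dialog passes only if every test case in it passes.
    dialogs_passed = sum(all(outcomes) for outcomes in dialog_results.values())
    return {
        "dialogs_passed_pct": percent(dialogs_passed, len(dialog_results)),
        "cases_passed_pct": percent(sum(cases), len(cases)),
    }


# Hypothetical outcomes: True means the test case passed.
results = {
    "root_to_ingredient_list": [True, True],
    "order_then_cancel": [True, False],
}

summary = summarize(results)
print(summary)  # {'dialogs_passed_pct': 50.0, 'cases_passed_pct': 75.0}
```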


Last updated: 03/31/2020